Bionic Hand Control with Real-Time B-Mode Ultrasound Web AI Vision

DOI: https://doi.org/10.62487/vjzf7254

Keywords: Bionics, B-mode Ultrasound, Web AI Vision, Computer Vision, Machine Learning, Artificial Intelligence, MobileNet

Abstract

Aim: This basic research study aimed to assess the ability of Web AI Vision to classify anatomical movement patterns in real-time B-mode ultrasound scans for controlling a virtual bionic limb. Methods: A MobileNetV2 model, implemented via the TensorFlow.js library, was used for transfer learning and feature extraction from 400 B-mode ultrasound images of the distal forearm of a single participant, corresponding to four hand positions: 100 images of a fist, 100 images of palmar abduction of the thumb, 100 images of a fist with an extended forefinger, and 100 images of an open palm. Results: After 32 epochs of training with an initial learning rate of 0.001 and a batch size of 16, the model achieved 100% validation accuracy, 100% test accuracy, and a test cross-entropy loss of 0.0067 in differentiating the ultrasound patterns associated with each hand position. During manual testing with 40 ultrasound images excluded from training, validation, and testing, the model correctly predicted the hand position in all 40 cases (100%), with a mean predicted probability of 98.9% (SD ± 0.6). When tested with B-mode cine loops and live ultrasound scanning, the model performed real-time predictions at a 20 ms interval, corresponding to 50 predictions per second. Conclusion: This study demonstrated the ability of Web AI Vision to classify anatomical movement patterns in real-time B-mode ultrasound scans for controlling a virtual bionic limb. Such ultrasound- and Web AI-powered bionic limbs can be easily and automatically retrained and recalibrated in a privacy-safe manner on the client side, within a web environment, and without extensive computational costs. Using the same ultrasound scanner that controls the limb, patients can efficiently adjust the Web AI Vision model with new B-mode scans as needed, without relying on external services.
The advantages of this combination warrant further research into AI-powered muscle movement analysis and the utilization of ultrasound-powered Web AI in rehabilitation medicine, neuromuscular disease management, and advanced prosthetic control for amputees.


Original Article

Introduction

Limb loss or dysfunction is a serious challenge for patients and clinicians alike, driving scientists and engineers to develop effective replacement solutions. These range from classical prosthetics to synthetic limb substitutes with enhanced functional capabilities. As health science advances, prosthetics are evolving into bionics, a field that seeks to integrate mechanical functions with biological entities by mimicking native physiological processes1.

B-Mode Ultrasound for Bionics

Current research in prosthetic limb technology focuses on enhancing prostheses with various biological feedback mechanisms, primarily electromyography, ultrasound, and custom sensors, to convert biological signals into mechanical movements. Each of these methods has its own advantages and limitations. Among them, B-mode (brightness mode) ultrasound has been investigated for over 20 years2, evolving from basic parametric still-image analysis3 to advanced convolutional neural networks capable of analyzing real-time B-mode ultrasound scans to predict prosthetic limb movements4.

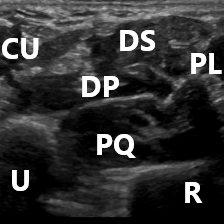

B-mode (brightness mode) ultrasound is a well-established and widely used imaging technique in both clinical practice and medical research, with a history spanning over 80 years5. It produces two-dimensional grayscale images based on the intensity of reflected high-frequency sound waves (Figure 1).

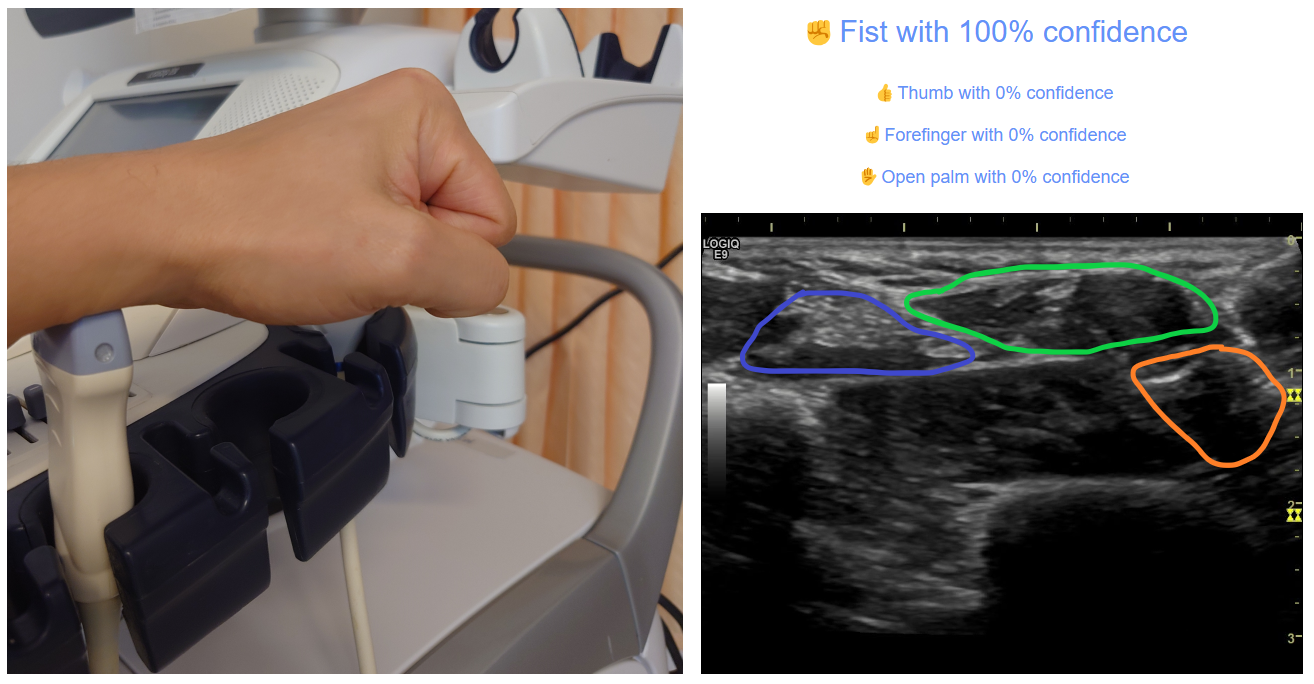

Figure 1A. Ultrasound transducer placed over the forearm, capturing a real-time anatomical grayscale image. Downward arrow: emitted sound wave; upward arrow (toward the transducer): returning echo used for image formation.

Figure 1B. B-mode ultrasound scan of the anterior forearm, bilinearly resized to 224×224 pixels for model training and prediction. Labeled anatomical structures: U – Ulna, R – Radius, PQ – Musculus pronator quadratus, DP – Flexor digitorum profundus, PL – Flexor pollicis longus, DS – Flexor digitorum superficialis, CU – Flexor carpi ulnaris.

These waves are emitted by a specialized sensor called an ultrasound transducer and travel through the body. As they encounter different tissues, they are reflected back to the transducer at varying intensities, depending on the acoustic properties of the tissues. The returning echoes are then processed to generate cross-sectional images, where brighter areas correspond to stronger reflections (e.g., bone or fibrous tissue) and darker areas represent weaker reflections (e.g., muscle or fluid). This non-invasive method provides real-time anatomical information and is particularly valuable for musculoskeletal, vascular, and soft tissue assessment.
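The brightness mapping described above can be illustrated with conventional logarithmic compression: each echo amplitude is expressed in decibels relative to the strongest displayed echo and mapped linearly onto gray levels. This is a minimal sketch; the function name `amplitudeToGray` and the 60 dB dynamic range in the example are illustrative assumptions, and clinical scanners such as the LOGIQ E9 apply additional processing that is not described in this article.

```typescript
// Hypothetical sketch: map echo envelope amplitudes to 8-bit B-mode gray levels
// with conventional logarithmic compression. Real scanners apply further
// processing that is not modeled here.

/**
 * Converts one echo envelope amplitude to a gray level in [0, 255].
 * @param amplitude      detected echo amplitude (arbitrary linear units)
 * @param maxAmplitude   amplitude displayed as full white (255)
 * @param dynamicRangeDb displayed dynamic range in decibels (assumed parameter)
 */
function amplitudeToGray(amplitude: number, maxAmplitude: number, dynamicRangeDb: number): number {
  if (maxAmplitude <= 0 || dynamicRangeDb <= 0) {
    throw new Error("maxAmplitude and dynamicRangeDb must be positive");
  }
  if (amplitude <= 0) return 0; // no echo: black
  // Level relative to the strongest displayed echo: 0 dB at max, negative below it.
  const levelDb = 20 * Math.log10(amplitude / maxAmplitude);
  // Map [-dynamicRangeDb, 0] dB linearly onto [0, 1], clamping outside the window.
  const t = Math.min(1, Math.max(0, (levelDb + dynamicRangeDb) / dynamicRangeDb));
  return Math.round(255 * t);
}

// Strong reflector (e.g. bone surface) vs. a reflector 40 dB weaker.
const strongEcho = amplitudeToGray(1.0, 1.0, 60); // 255 (white)
const weakEcho = amplitudeToGray(0.01, 1.0, 60); // -40 dB -> (20 / 60) * 255 = 85
```

With a 60 dB range, an echo 40 dB weaker than the strongest reflector is displayed as dark gray (85), which is why strongly reflecting bone surfaces appear bright and muscle tissue appears darker.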

The primary advantage of B-mode ultrasound in bionics is its ability to provide detailed, real-time anatomical insight into limb movements6. Its main limitation is the large volume of information it generates, which requires considerably more preprocessing than electromyography or custom sensors. Because of this computational burden, the machine learning pipeline must be efficient enough to convert real-time ultrasound images into bionic control signals with low latency, enabling real-time prosthetic movement.

Despite advances in transforming B-mode ultrasound scans into movement signals for bionic limbs, individual calibration remains a challenge for B-mode ultrasound-powered bionic devices4. Changes in sensor placement and physiological variations, such as fluid shifts in the limb, can reduce prediction accuracy, necessitating frequent retraining or recalibration of the machine learning model. Traditional deep learning models, such as custom convolutional neural networks, require significant computational resources and time to train and adapt to individual needs, making these devices highly customized and reliant on external services4.

Web AI for Bionics

Web AI provides a low-computational-cost solution for developing and deploying machine learning models in a web-based environment. Powered by frameworks like TensorFlow.js7, Web AI enables on-device AI processing, eliminating the need for third-party data transfers. In image classification tasks, it leverages transfer learning and pre-trained models to minimize computational requirements, allowing real-time image classification with low latency on a wide range of client devices, from desktops to smartphones.

Recent research8 9 10 11 12 13 14 has demonstrated the validity of transfer learning-powered AI models for medical image classification, showing high accuracy even with relatively small datasets. This suggests that Web AI could be a scalable and efficient alternative for real-time ultrasound-based bionic limb control.

Hypothesis

AI Vision models, which learn discriminative image features from their training data, can assess complex ultrasound images objectively and with high accuracy. Specifically, ultrasound AI Vision can analyze musculoskeletal properties of a limb, extract ultrasonic patterns associated with specific hand positions, and predict the bionic limb's movement based on previously unseen ultrasound data4. However, the high level of customization required for these models, which must be repeatedly trained and retrained to meet individual needs, makes ultrasound-powered bionic limbs both costly and computationally demanding4.

Web AI has the potential to overcome this limitation by enabling fully automated training and retraining of B-mode ultrasound models without human intervention in feature extraction or model updates, entirely on the client side and without requiring extensive computational power.

Aim

This study aimed to assess the ability of Web AI Vision to classify anatomical movement patterns in real-time B-mode ultrasound scans for controlling a virtual bionic limb.

Material and Methods

This study was designed as basic research and involved data collection, preprocessing, model development, and testing.

Data Collection

The dataset consisted of still ultrasound images and cine loops of the distal forearm from a single research participant - the author (YR). The ultrasound images were acquired following previously described sonoanatomical protocols for this region15. Grayscale ultrasound imaging was performed in axial view, assessing the following anatomic landmarks from bottom to top (Figure 1):

- Ulna

- Radius

- Musculus pronator quadratus

- Flexor digitorum profundus

- Flexor pollicis longus

- Flexor digitorum superficialis

- Flexor carpi ulnaris

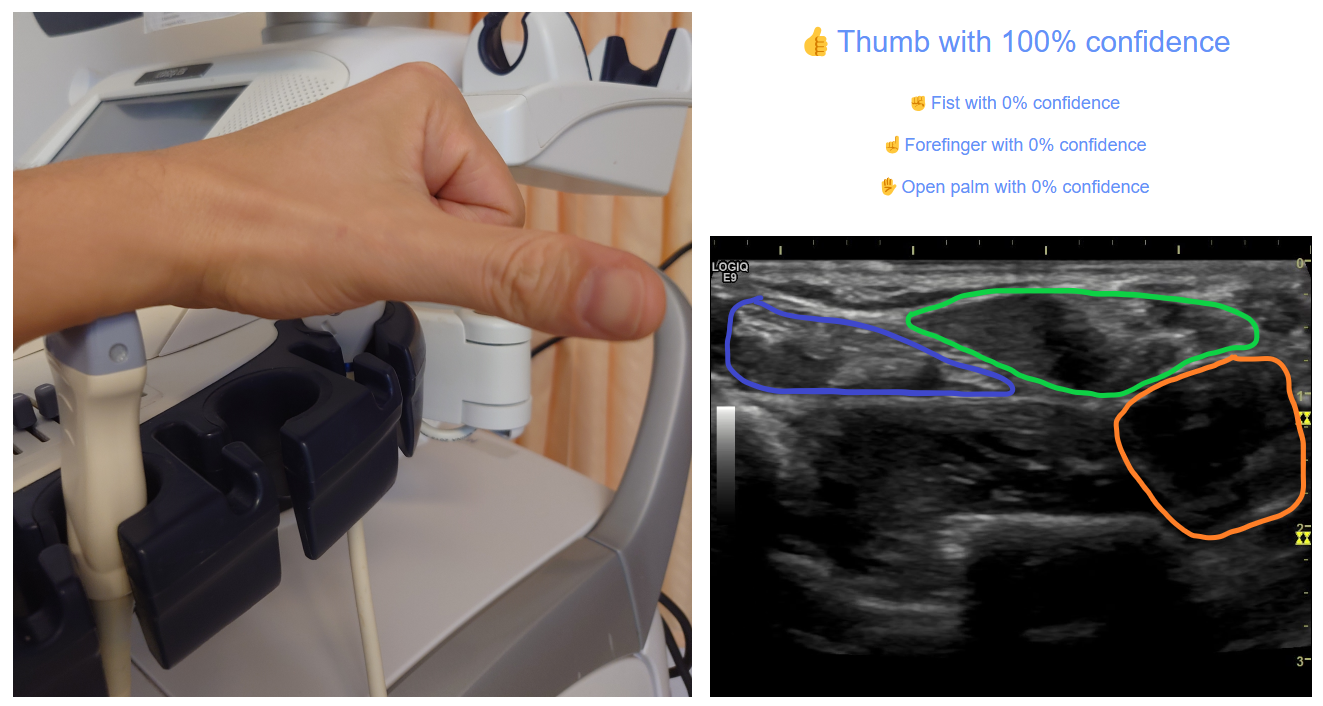

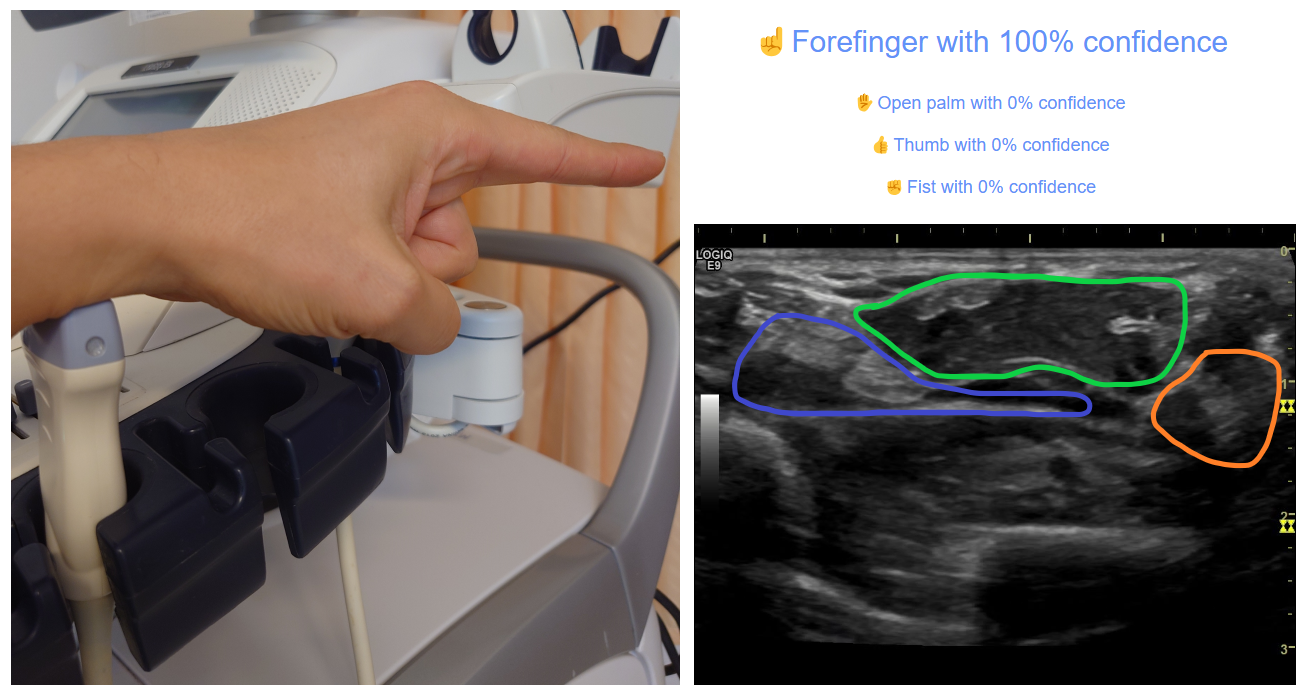

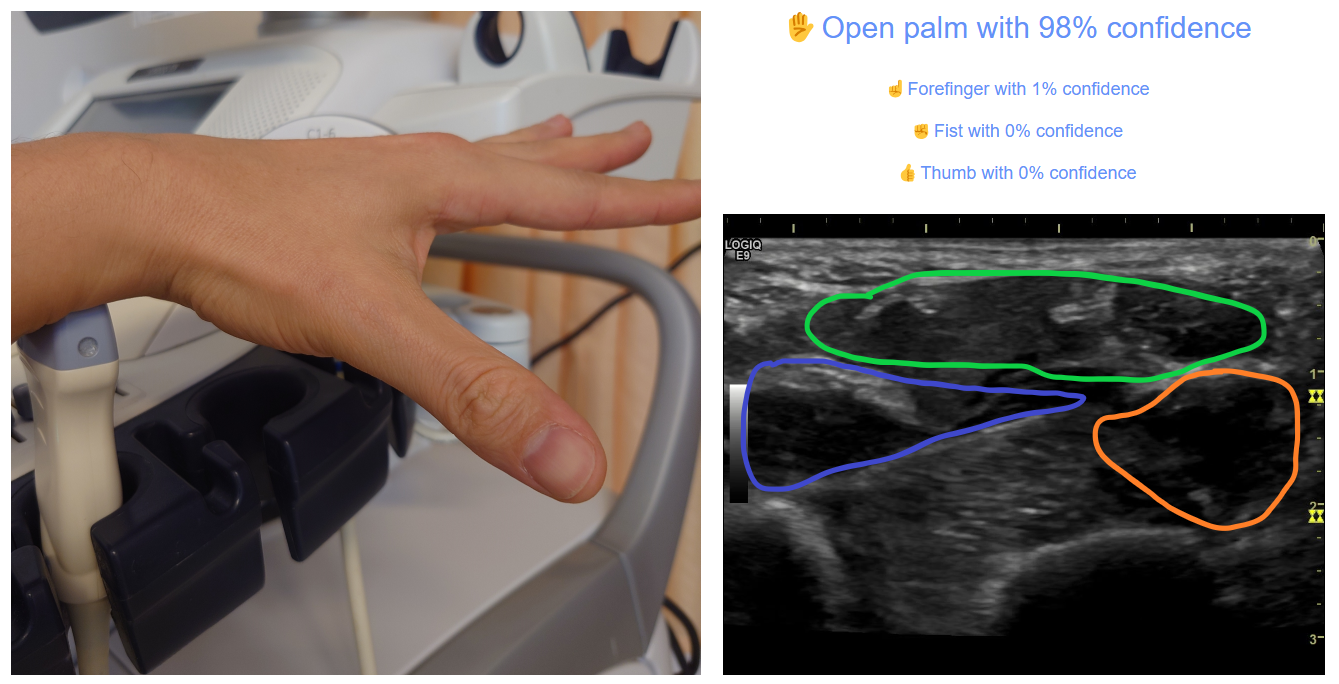

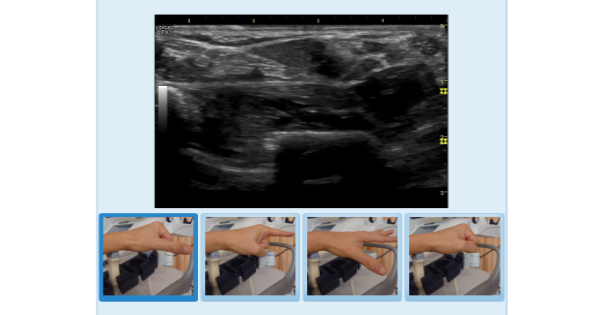

A linear GE 9L transducer (LOGIQ E9) was mounted on the ultrasound scanner facing upward, and the left arm of the research participant was placed on top of it. This non-fixed transducer setup introduced additional movement, simulating real-life instability and challenging the Web AI model's ability to perform under dynamic sensor conditions (Figure 2). The “Small parts” preset was used with a depth setting of 3 cm for the entire imaging session. A total of 400 still images were collected for model development (training, validation, and testing), 40 still images were reserved for manual testing, and 4 cine loops were used for real-time manual testing, with equal distribution among the four hand positions:

1. Fist position

2. Palmar abduction of the thumb

3. Fist with an extended forefinger

4. Extended palm

A. Extended Thumb Position: Ultrasound shows contracted flexor digitorum profundus and flexor digitorum superficialis, appearing as hyperechoic tendons, while the relaxed flexor pollicis longus is visualized as a hypoechoic muscle.

B. Extended Forefinger Position: Ultrasound shows partially relaxed flexor digitorum profundus and flexor digitorum superficialis, appearing as a mix of hypoechoic muscle and hyperechoic tendons, while the contracted flexor pollicis longus is visualized as a predominantly hyperechoic structure with a remaining hypoechoic muscle component.

C. Open Palm Position: Ultrasound shows relaxed flexor digitorum profundus, flexor digitorum superficialis, and flexor pollicis longus, all visualized as predominantly hypoechoic muscles.

D. Fist Position: Ultrasound shows contracted flexor digitorum profundus, flexor digitorum superficialis, and flexor pollicis longus, all visualized as predominantly hyperechoic tendons or contracted hyperechoic muscles.

Figure 2: Real-time predictions made by the model on previously unseen B-mode cine loops of the forearm.

Additionally, the model was tested in live scanning by capturing real-time ultrasound video and transmitting it directly to the video classifier.

Data Preprocessing

All images were manually cropped to remove artifacts, retaining only the grayscale ultrasound image of the forearm. Each image was then automatically resized to 224×224 pixels using bilinear interpolation at input, for both model training and prediction.

To ensure consistent input scaling, pixel values were normalized by dividing all values by 255.
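The two preprocessing steps above can be sketched without any framework. This is a minimal illustration, not the platform's code: the `GrayImage` type and the function names are hypothetical, and sampling at half-pixel centers is an assumption, since the exact interpolation convention of the platform's TensorFlow.js pipeline is not stated.

```typescript
// Hypothetical sketch of the preprocessing described above: bilinear resize of a
// cropped grayscale frame to 224x224, then division by 255 to scale to [0, 1].

interface GrayImage {
  width: number;
  height: number;
  data: Float32Array; // row-major pixel values, length = width * height
}

function bilinearResize(src: GrayImage, outW: number, outH: number): GrayImage {
  const out = new Float32Array(outW * outH);
  const scaleX = src.width / outW;
  const scaleY = src.height / outH;
  for (let y = 0; y < outH; y++) {
    // Map the output pixel center back into source coordinates, clamped to the image.
    const sy = Math.min(Math.max((y + 0.5) * scaleY - 0.5, 0), src.height - 1);
    const y0 = Math.floor(sy);
    const y1 = Math.min(y0 + 1, src.height - 1);
    const wy = sy - y0;
    for (let x = 0; x < outW; x++) {
      const sx = Math.min(Math.max((x + 0.5) * scaleX - 0.5, 0), src.width - 1);
      const x0 = Math.floor(sx);
      const x1 = Math.min(x0 + 1, src.width - 1);
      const wx = sx - x0;
      // Interpolate horizontally along two source rows, then vertically between them.
      const top = src.data[y0 * src.width + x0] * (1 - wx) + src.data[y0 * src.width + x1] * wx;
      const bottom = src.data[y1 * src.width + x0] * (1 - wx) + src.data[y1 * src.width + x1] * wx;
      out[y * outW + x] = top * (1 - wy) + bottom * wy;
    }
  }
  return { width: outW, height: outH, data: out };
}

// Scale 8-bit intensities (0..255) to the [0, 1] range used as model input.
function normalizeTo01(img: GrayImage): GrayImage {
  return { width: img.width, height: img.height, data: img.data.map((v) => v / 255) };
}

// Full pipeline for one cropped frame, for training and prediction alike.
function preprocessFrame(cropped: GrayImage): GrayImage {
  return normalizeTo01(bilinearResize(cropped, 224, 224));
}
```

Applying the identical function to training images and live frames avoids a mismatch between the inputs the model learned from and the inputs it sees at prediction time.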

AI Vision Model Development

The complete MobileNetV2 model16, including all of its layers, was used for transfer learning and implemented via the TensorFlow.js7 library. MobileNetV2 was chosen for its efficiency and its ability to perform well on smaller datasets while still achieving high accuracy in image classification. Its lightweight architecture enables fast processing with competitive accuracy, making it well suited to medical image analysis on client devices. MobileNetV2-based models have performed well in previous medical imaging research8 9 10 11 12 13 14. In addition, this approach allowed the model to be trained and deployed directly on the client side, ensuring that no data were transferred to third-party servers and thereby maintaining complete data privacy.

We used MobileNetV2’s global average pooling layer for feature extraction and appended a custom multilayer perceptron (dense layers of 1024, 512, 256, and 128 units) with ReLU activations, batch normalization, and dropout to reduce overfitting. The final layer uses a softmax activation for multi-class classification (a two-unit softmax for two-class problems), and the loss function, categorical or binary cross-entropy, is selected automatically based on the number of classes.
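To give a sense of the classification head's size, its trainable parameters can be counted directly. This sketch rests on assumptions not stated in the source: the MobileNetV2 (width multiplier 1.0) pooled feature vector has 1280 values, one batch-normalization layer follows each hidden dense layer, and the MobileNetV2 base is excluded from the count. The function names are hypothetical; the returned loss names are the string identifiers TensorFlow.js accepts in `model.compile`.

```typescript
// Hypothetical sketch: trainable parameters of the head described above
// (dense 1024-512-256-128 with batch normalization, then a softmax output).
// Assumed: 1280-dimensional pooled MobileNetV2 features, BN after every hidden layer.

function denseParams(inputs: number, units: number): number {
  return inputs * units + units; // weight matrix + bias vector
}

function headTrainableParams(featureDim: number, hidden: number[], numClasses: number): number {
  let total = 0;
  let inputs = featureDim;
  for (const units of hidden) {
    total += denseParams(inputs, units);
    total += 2 * units; // batch-norm gamma and beta; moving mean/variance are not trainable
    inputs = units;
  }
  // Dropout adds no parameters; the softmax output is a plain dense layer.
  return total + denseParams(inputs, numClasses);
}

// Loss selection as described: binary cross-entropy for two classes, otherwise categorical.
function selectLoss(numClasses: number): "binaryCrossentropy" | "categoricalCrossentropy" {
  return numClasses === 2 ? "binaryCrossentropy" : "categoricalCrossentropy";
}

const headParams = headTrainableParams(1280, [1024, 512, 256, 128], 4);
```

Under these assumptions the four-class head has 2,005,124 trainable parameters, about two thirds of them in the first 1280 × 1024 dense layer, plus 3,840 non-trainable batch-normalization statistics.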

A standard dataset split was applied:

- 80% for training,

- 10% for validation,

- 10% for testing.
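An 80/10/10 split of this kind can be sketched as follows. The source does not say whether the split is stratified by hand position or how images are shuffled, so this sketch assumes a per-class (stratified) split shuffled with a seeded generator (mulberry32) for reproducibility; all names are hypothetical.

```typescript
// Hypothetical sketch of an 80/10/10 split, assumed to be stratified per class
// and shuffled with a seeded PRNG so the same seed always gives the same split.

type Sample = { id: string; label: string };

// mulberry32: small deterministic PRNG returning floats in [0, 1).
function mulberry32(seed: number): () => number {
  let a = seed >>> 0;
  return () => {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Fisher-Yates shuffle on a copy of the input.
function shuffle<T>(items: T[], rand: () => number): T[] {
  const a = items.slice();
  for (let i = a.length - 1; i > 0; i--) {
    const j = Math.floor(rand() * (i + 1));
    [a[i], a[j]] = [a[j], a[i]];
  }
  return a;
}

function stratifiedSplit(samples: Sample[], fractions = { train: 0.8, val: 0.1 }, seed = 42) {
  const rand = mulberry32(seed);
  const byLabel = new Map<string, Sample[]>();
  for (const s of samples) {
    const group = byLabel.get(s.label) ?? [];
    group.push(s);
    byLabel.set(s.label, group);
  }
  const train: Sample[] = [];
  const validation: Sample[] = [];
  const test: Sample[] = [];
  byLabel.forEach((group) => {
    const shuffled = shuffle(group, rand);
    const nTrain = Math.round(shuffled.length * fractions.train);
    const nVal = Math.round(shuffled.length * fractions.val);
    train.push(...shuffled.slice(0, nTrain));
    validation.push(...shuffled.slice(nTrain, nTrain + nVal));
    test.push(...shuffled.slice(nTrain + nVal)); // remainder (about 10%) goes to test
  });
  return { train, validation, test };
}
```

With 100 images per hand position this yields 80, 10, and 10 images per class, i.e. 320, 40, and 40 images overall. Stratifying keeps all four hand positions equally represented in the small validation and test sets.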

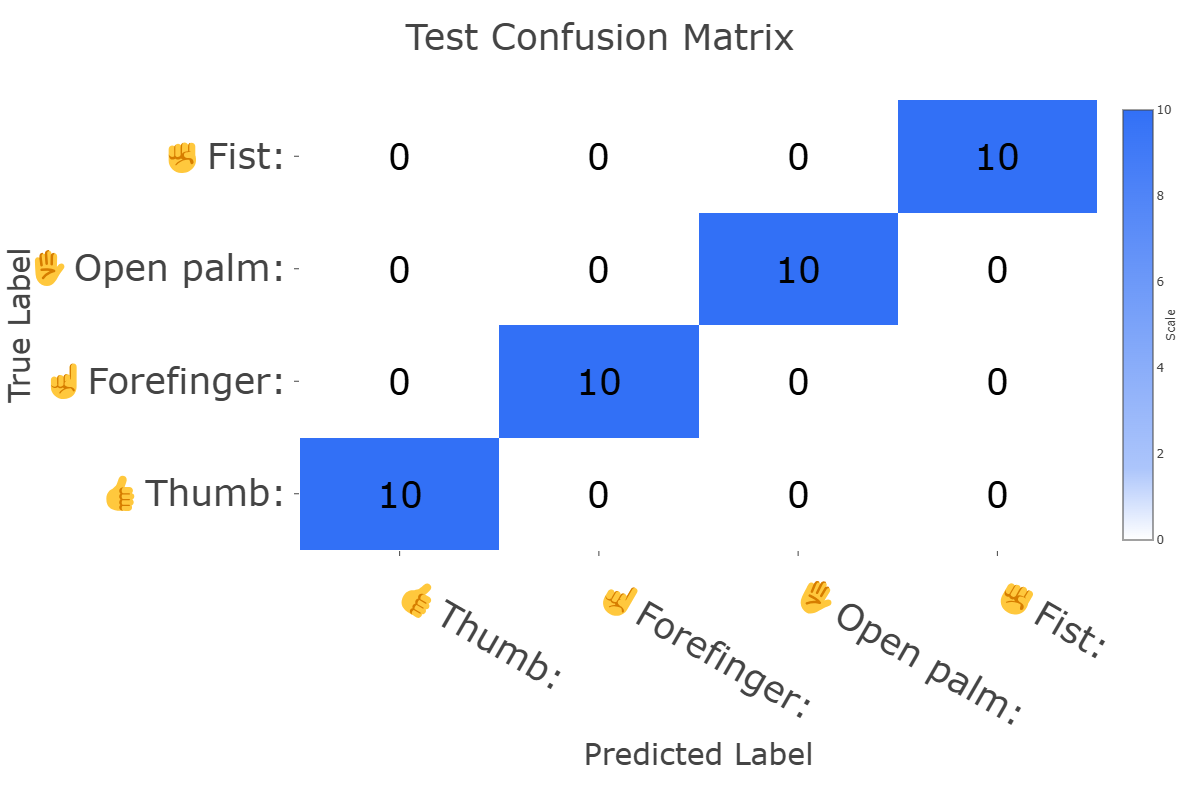

The validation dataset was used to monitor cross-entropy loss and accuracy during training. An automatic early stopping mechanism halted training if no improvement was observed for 10 consecutive epochs. The test dataset was used for the final model evaluation, assessing accuracy, loss, precision, recall, ROC AUC, and the confusion matrix.
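The test-set metrics listed above can all be derived from the confusion matrix. In this sketch rows are true classes and columns are predicted classes; the class indices (0 = Thumb, 1 = Forefinger, 2 = Open Palm, 3 = Fist) are an illustrative assumption, and a metric whose denominator is zero is reported as 0, which is one common convention. The function names are hypothetical.

```typescript
// Hypothetical sketch: confusion matrix plus per-class and macro-averaged
// precision, recall and F1. Rows = true class, columns = predicted class.

function confusionMatrix(trueLabels: number[], predLabels: number[], numClasses: number): number[][] {
  if (trueLabels.length !== predLabels.length) throw new Error("length mismatch");
  const m = Array.from({ length: numClasses }, () => new Array<number>(numClasses).fill(0));
  trueLabels.forEach((t, i) => {
    m[t][predLabels[i]] += 1;
  });
  return m;
}

interface ClassMetrics {
  precision: number;
  recall: number;
  f1: number;
}

function perClassMetrics(m: number[][]): ClassMetrics[] {
  return m.map((row, c) => {
    const tp = row[c];
    const predictedAsC = m.reduce((sum, r) => sum + r[c], 0); // column sum
    const actualC = row.reduce((sum, v) => sum + v, 0); // row sum
    const precision = predictedAsC > 0 ? tp / predictedAsC : 0;
    const recall = actualC > 0 ? tp / actualC : 0;
    const f1 = precision + recall > 0 ? (2 * precision * recall) / (precision + recall) : 0;
    return { precision, recall, f1 };
  });
}

// Macro average: unweighted mean over classes.
function macroAverage(metrics: ClassMetrics[]): ClassMetrics {
  const n = metrics.length;
  const sum = metrics.reduce(
    (acc, x) => ({ precision: acc.precision + x.precision, recall: acc.recall + x.recall, f1: acc.f1 + x.f1 }),
    { precision: 0, recall: 0, f1: 0 },
  );
  return { precision: sum.precision / n, recall: sum.recall / n, f1: sum.f1 / n };
}
```

With no misclassifications the matrix is diagonal, so every per-class and macro value equals 1 (100%); a single off-diagonal entry lowers the recall of its true class and the precision of its predicted class.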

The model was trained with the following preset hyperparameters:

- Batch size: 16

- Initial learning rate: 0.001

- Learning rate scheduler: Reduced learning rate by 5% per epoch
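The training-control settings described above (early stopping after 10 epochs without improvement, and a 5% learning-rate reduction per epoch) can be sketched as two small functions. Two details are assumptions, since the source does not specify them: the rate is multiplied by 0.95 once per completed epoch (epoch 0 uses the initial rate), and early stopping monitors validation loss. The function names are hypothetical.

```typescript
// Hypothetical sketch of the schedule and stopping rule. Assumptions: exponential
// 5% decay per completed epoch, and validation loss as the monitored quantity.

function learningRateAt(epoch: number, initialLr = 0.001, decayPerEpoch = 0.05): number {
  return initialLr * Math.pow(1 - decayPerEpoch, epoch);
}

/** Number of epochs actually run before early stopping (or all of them). */
function epochsUntilEarlyStop(valLosses: number[], patience = 10, minDelta = 0): number {
  let best = Infinity;
  let epochsWithoutImprovement = 0;
  for (let epoch = 0; epoch < valLosses.length; epoch++) {
    if (valLosses[epoch] < best - minDelta) {
      best = valLosses[epoch]; // new best: reset the patience counter
      epochsWithoutImprovement = 0;
    } else {
      epochsWithoutImprovement += 1;
      if (epochsWithoutImprovement >= patience) return epoch + 1; // stop after this epoch
    }
  }
  return valLosses.length;
}
```

Under this schedule, after 32 completed epochs the rate would have decayed to 0.001 × 0.95^32, roughly 1.9 × 10^-4, about a fifth of its initial value.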

Model training, validation, and testing were performed on the “ML in Health Science” platform using the Image Classifier17 (version 2.25) and Video Classifier18 (version 1.0) applications.

Manual Test

To further evaluate real-time performance, manual testing used 40 randomly selected sonograms (equivalent to 10% of the 400-image development dataset) that were excluded from training, validation, and testing, together with four cine loops and live ultrasound scans.

Statistical analysis

Numerical data collection and statistical analysis were performed using Microsoft Excel (Office 2016, USA).

Results

Validation and Test

Early stopping was triggered after 32 epochs. On the held-out test set, the model achieved an accuracy of 100% and a cross-entropy loss of 0.0067. The total training time, excluding image upload, was under 1 minute on an HP Pavilion x360 laptop with an Intel Core i5 processor and integrated graphics (no dedicated GPU).
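The reported cross-entropy can be translated into a more intuitive quantity. Because mean categorical cross-entropy is the average of -ln(p) over the probabilities p assigned to the correct class, exp(-loss) equals their geometric mean. The sketch below, with hypothetical function names and an assumed clipping constant, illustrates this relationship; it is an interpretation aid, not part of the original analysis.

```typescript
// Hypothetical sketch relating softmax outputs, categorical cross-entropy, and the
// geometric-mean probability assigned to the correct class.

function softmax(logits: number[]): number[] {
  const max = Math.max(...logits); // subtract the max for numerical stability
  const exps = logits.map((z) => Math.exp(z - max));
  const total = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / total);
}

function meanCategoricalCrossEntropy(probs: number[][], trueIdx: number[]): number {
  const eps = 1e-7; // assumed clipping constant to avoid ln(0)
  const sum = probs.reduce((acc, p, i) => acc - Math.log(Math.max(p[trueIdx[i]], eps)), 0);
  return sum / probs.length;
}

// exp(-mean cross-entropy) = geometric mean of the correct-class probabilities.
function impliedGeometricMeanProb(loss: number): number {
  return Math.exp(-loss);
}
```

For the reported test loss of 0.0067, exp(-0.0067) ≈ 0.9933, i.e. a geometric-mean correct-class probability of about 99.3% on the test set.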

Table 1 presents the performance metrics of the model, while Figures 3 and 4 show the confusion matrix, accuracy per epoch, and loss per epoch.

Table 1: Performance metrics of the model.

| Metrics | Thumb | Forefinger | Open Palm | Fist | Macro / Overall |
|---|---|---|---|---|---|
| Test Precision | 100% | 100% | 100% | 100% | 100% |
| Test Recall | 100% | 100% | 100% | 100% | 100% |
| Test F1 score | 100% | 100% | 100% | 100% | 100% |
| Test ROC AUC | 100% | 100% | 100% | 100% | 100% |
| Training Accuracy | | | | | 100% |
| Training Loss | | | | | 0.0049 |
| Validation Accuracy | | | | | 100% |
| Validation Loss | | | | | 0.0087 |
| Test Accuracy | | | | | 100% |
| Test Loss | | | | | 0.0067 |

The model achieved a test accuracy of 100%, with no misclassifications in the previously unseen test dataset.
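The Test ROC AUC values in Table 1 correspond to one-vs-rest comparisons per hand position. A minimal sketch of the standard rank-based definition follows: the AUC is the fraction of (positive, negative) image pairs in which the positive image receives the higher predicted probability, with ties counted as one half. The function names are hypothetical, and the platform may compute AUC differently (for example, by integrating a thresholded ROC curve).

```typescript
// Hypothetical sketch: one-vs-rest ROC AUC via pairwise comparison
// (equivalent to the Mann-Whitney U statistic), and its macro average.

function oneVsRestAuc(scores: number[], isPositive: boolean[]): number {
  const pos: number[] = [];
  const neg: number[] = [];
  scores.forEach((s, i) => (isPositive[i] ? pos : neg).push(s));
  if (pos.length === 0 || neg.length === 0) return NaN; // undefined without both groups
  let wins = 0;
  for (const p of pos) {
    for (const n of neg) {
      if (p > n) wins += 1;
      else if (p === n) wins += 0.5; // ties count one half
    }
  }
  return wins / (pos.length * neg.length);
}

// probs[i][c] = predicted probability of class c for image i.
function macroAuc(probs: number[][], trueIdx: number[], numClasses: number): number {
  let sum = 0;
  for (let c = 0; c < numClasses; c++) {
    sum += oneVsRestAuc(probs.map((p) => p[c]), trueIdx.map((t) => t === c));
  }
  return sum / numClasses;
}
```

When every image of a class receives a higher probability for that class than every other image does, the class reaches an AUC of 1.0 (100%). The pairwise loop is quadratic in the number of images, which is negligible for a 40-image test set.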

Figure 3: Confusion matrix of the trained model.

Figure 4: Accuracy and loss per epoch during model training.

Manual Test

The trained model was evaluated on 40 independent sonograms, 10 per hand position, with the following mean predicted probabilities:

- 10 thumb images: mean probability: 99.9% (SD ± 0.0)

- 10 forefinger images: mean probability: 92.0% (SD ± 8.6)

- 10 palm images: mean probability: 98.6% (SD ± 1.2)

- 10 fist images: mean probability: 99.9% (SD ± 0.0)

The model correctly classified all 40 cases, with 39 predictions exceeding 99.9% probability and only one open palm case at 95% probability.
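The per-position summaries above are means and standard deviations of the predicted probabilities. The source does not state whether Excel's sample (STDEV.S) or population (STDEV.P) standard deviation was used, so this sketch supports both; the function names are hypothetical.

```typescript
// Hypothetical sketch of the descriptive statistics used for the manual test.

function mean(xs: number[]): number {
  if (xs.length === 0) throw new Error("mean of an empty list is undefined");
  return xs.reduce((a, b) => a + b, 0) / xs.length;
}

// sample = true divides by n - 1 (like STDEV.S); false divides by n (like STDEV.P).
function standardDeviation(xs: number[], sample = true): number {
  if (xs.length < (sample ? 2 : 1)) throw new Error("not enough values");
  const m = mean(xs);
  const squared = xs.reduce((acc, x) => acc + (x - m) ** 2, 0);
  return Math.sqrt(squared / (sample ? xs.length - 1 : xs.length));
}
```

Applying `mean` and `standardDeviation` to the ten predicted probabilities of each hand position gives summaries in the same form as those reported above.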

When tested with B-mode cine loops and live ultrasound scanning, the model successfully performed real-time predictions with a 20 ms interval between predictions, achieving 50 predictions per second on the same device that was used for model training (Figure 2).
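The figure of 50 predictions per second follows from the 20 ms interval (1000 / 20 = 50), provided each inference completes within that interval. The sketch below makes this explicit and shows one way to schedule non-overlapping predictions; `predictFrame` is a hypothetical callback standing in for frame capture and classification, not an API of the Video Classifier.

```typescript
// Hypothetical sketch: effective prediction rate and a non-overlapping loop.

function predictionsPerSecond(intervalMs: number, inferenceMs: number): number {
  if (intervalMs <= 0 || inferenceMs < 0) throw new Error("invalid timings");
  // Each cycle lasts at least the interval, and at least as long as one inference.
  return 1000 / Math.max(intervalMs, inferenceMs);
}

// Starts a new prediction only after the previous one has finished.
// Returns a function that stops the loop.
function startPredictionLoop(predictFrame: () => Promise<void>, intervalMs: number): () => void {
  let running = true;
  const tick = async (): Promise<void> => {
    if (!running) return;
    const started = Date.now();
    try {
      await predictFrame();
    } catch (err) {
      console.error("prediction failed", err); // skip a bad frame, keep the loop alive
    }
    const elapsed = Date.now() - started;
    // Next start is intervalMs after this start, or immediately if inference ran long.
    if (running) setTimeout(() => void tick(), Math.max(0, intervalMs - elapsed));
  };
  void tick();
  return () => {
    running = false; // stop scheduling further predictions
  };
}
```

If inference took longer than 20 ms on a slower device, the effective rate would fall below 50 per second; for example, a 25 ms inference would limit it to 40 predictions per second.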

Discussion

This study demonstrates the ability of Web AI Vision to identify anatomic patterns associated with predefined hand positions using grayscale ultrasound images of the distal anterior forearm. The model successfully performed real-time classification, accurately predicting hand positions based on previously unseen sonograms, with no misclassifications.

Practical standpoint

B-mode ultrasound, enhanced by transfer learning and deployed as a Web AI model, has the potential to serve as a valuable tool for guiding prosthetic limbs and exoskeletons. Unlike traditional sensor-based systems such as electromyography or A-mode ultrasound, B-mode ultrasound provides a real-time anatomical assessment, offering significantly more detailed and dynamic information about limb movement.

By leveraging pretrained image classification models, the AI model can transfer prior knowledge from general-purpose datasets to the B-mode ultrasound domain, allowing for the development of a new model with minimal computational resources. This transfer learning approach does not require extensive computing power and can be executed in a web-based environment. Web AI image classification models can achieve high accuracy with relatively small datasets of B-mode images. Moreover, training and predictions can be performed entirely on the client side, meaning they can run on any device with web browser capabilities, including smartphones and tablets18. This ability to operate with low computational costs allows the same ultrasound scanner that controls the limb to be used for real-time model retraining.

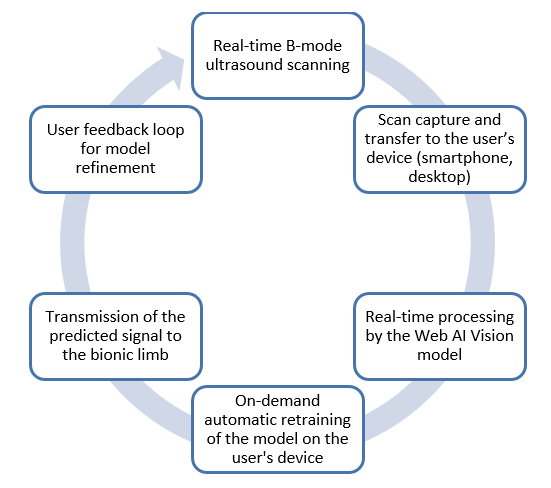

Patients can adjust and personalize their model by retraining it with new B-mode scans directly on their own device (smartphone or laptop), without requiring external computational resources or third-party services18. This makes the system not only cost-effective but also privacy-preserving, because the scans never leave the device, an increasingly important factor given both official and unofficial recommendations on the ethical use of AI in healthcare. On-device data processing aligns with emerging regulations and best practices for maintaining patient confidentiality and data sovereignty in AI-driven medical applications19 (Figure 5).

Figure 5: Flowchart illustrating the utilization of B-mode ultrasound and Web AI Vision for controlling bionic limbs.

The combination of B-mode ultrasound and Web AI-based transfer learning could substantially advance bionics and limb prosthetics, enabling patients not only to control their bionic limb but also to adjust and optimize it autonomously according to their individual needs and changing conditions.

Limitations

Despite its promising potential, this approach has several limitations:

1. Inter-Individual Variability and Limited Dataset: The model was trained on a single participant because of the highly individualized nature of B-mode ultrasound anatomy, which can vary considerably between individuals (depending on factors such as age, sex, hydration status, muscle mass, and underlying conditions) as well as within the same individual over time. This inherent variability limits the generalizability of the model and makes extrapolation to other users challenging. The dataset was therefore intentionally limited, to test whether meaningful classification could still be achieved under realistic, individualized conditions. Despite this constraint, the study demonstrated that accurate model performance is feasible with a small, personalized dataset, highlighting the potential of Web AI to enable client-side model training and retraining. This approach supports real-time personalization without external services or extensive computational resources, and its usability by non-expert patients warrants further investigation.

2. Healthy limb participant: As this was a basic research study, the model was trained using data from a healthy individual. Therefore, extrapolating the results to amputees and patients with musculoskeletal disorders should be done with caution. Nevertheless, the study demonstrates the feasibility of the method and warrants further research on specific patient cohorts.

3. Virtual bionic hand: This study demonstrated the feasibility of using Web AI and B-mode ultrasound to guide a virtual bionic hand. However, the use of a virtual model may not fully represent real-world performance due to potential differences in latency, mechanical response, and integration challenges. Additionally, this limitation makes it difficult to directly compare the proposed approach with currently established alternatives such as electromyography, A-mode ultrasound, and custom sensor systems. Further studies are required to evaluate the practical application of this method in controlling physical prosthetic or bionic limbs in real-time clinical scenarios, as well as to perform head-to-head comparisons with existing control modalities in terms of accuracy, latency, usability, and adaptability.

4. Ultrasound Image Acquisition Challenges: The effectiveness of the model depends on the quality of ultrasound images. This study used a professional ultrasound device, which may not reflect the imaging capabilities of currently available small portable ultrasound systems. Further research is needed to evaluate the feasibility of using low-power portable ultrasound devices for this application.

5. Holistic Image Classification Approach: This study employs a holistic image classification approach, which treats each ultrasound scan as a single input rather than analyzing specific anatomical regions. While complex image segmentation and region-specific recognition could potentially improve model accuracy, identifying the most relevant anatomic patterns remains a challenge. Additionally, segmentation-based approaches require longer training times and higher computational resources, limiting their feasibility in low-power Web AI environments.

6. Effects of Image Resizing on Model Performance: Image resizing is necessary to standardize input dimensions, but downsampling can discard fine detail and resizing a non-square crop to 224×224 distorts its aspect ratio, either of which may affect the model’s predictive accuracy. Preprocessing must therefore be optimized and applied identically during training and real-time prediction to ensure consistent model behavior.

Future Research for Real-World Implementation

Despite the promising results of this basic research study, further investigation is essential to enable the translation of this approach into real-life and clinical settings. Key areas for future research include:

- Application of this method to physical bionic and prosthetic limbs to assess real-time functional integration and latency.

- Utilization of portable and low-cost ultrasound devices to evaluate feasibility in non-specialized or home environments.

- Assessment of user interaction and model retraining by individuals without advanced expertise in computer science or medicine, to ensure accessibility and usability across patient populations.

Conclusion

This study demonstrated the ability of Web AI Vision to classify anatomical movement patterns in real-time B-mode ultrasound scans for controlling a virtual bionic limb. Such ultrasound- and Web AI-powered bionic limbs can be easily and automatically retrained and recalibrated in a privacy-safe manner on the client side, within a web environment, and without extensive computational costs. Using the same ultrasound scanner that controls the limb, patients can efficiently adjust the Web AI Vision model with new B-mode scans as needed, without relying on external services.

The advantages of this combination warrant further research into AI-powered muscle movement analysis and the utilization of ultrasound-powered Web AI in rehabilitation medicine, neuromuscular disease management, and advanced prosthetic control for amputees.

Declaration of Conflicting Interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) received no financial support for the research, authorship, and/or publication of this article.

Ethical approval: not applicable.

Guarantor: YR

Contributorship: YR: idea, building of the model and web application, draft of the manuscript. All authors: literature search, interpretation of data, editing of the manuscript, final approval of the manuscript.

Acknowledgements: Special thanks to Jason Mayes and the Web AI community for their support and for providing the opportunity to build upon TensorFlow.js.


References

1 United States. Wright Air Development Division. Directorate of Advanced Systems Technology. Bionics Symposium: Living Prototypes‐the Key to New Technology, 13-14-15 September 1960: Directorate of Advanced Systems Technology, Wright Air Development Division, Air Research and Development Command, U.S. Air Force; 1961.

2 Nazari V, Zheng Y-P. Controlling Upper Limb Prostheses Using Sonomyography (SMG): A Review. Sensors (Basel). 2023;23(4). doi:10.3390/s23041885.

3 Zheng YP, Chan MMF, Shi J, Chen X, Huang QH. Sonomyography: monitoring morphological changes of forearm muscles in actions with the feasibility for the control of powered prosthesis. Med Eng Phys. 2006;28(5):405-415. doi:10.1016/j.medengphy.2005.07.012.

4 Kamatham AT, Alzamani M, Dockum A, Sikdar S, Mukherjee B. SonoMyoNet: A Convolutional Neural Network for Predicting Isometric Force from Highly Sparse Ultrasound Images. IEEE Trans Hum Mach Syst. 2024;54(3):317-324. doi:10.1109/THMS.2024.3389690.

5 Rajamani A, Arun Bharadwaj P, Hariharan S, et al. A historical timeline of the development and evolution of medical diagnostic ultrasonography. J Clin Ultrasound. 2024;52(9):1419-1437. doi:10.1002/jcu.23808.

6 Akhlaghi N, Baker CA, Lahlou M, et al. Real-Time Classification of Hand Motions Using Ultrasound Imaging of Forearm Muscles. IEEE Trans Biomed Eng. 2016;63(8):1687-1698. doi:10.1109/TBME.2015.2498124.

7 TensorFlow. TensorFlow.js is a library for machine learning in JavaScript. https://www.tensorflow.org/js. Accessed September 27, 2024.

8 Xu L, Mohammadi M. Brain tumor diagnosis from MRI based on Mobilenetv2 optimized by contracted fox optimization algorithm. Heliyon. 2024;10(1):e23866. doi:10.1016/j.heliyon.2023.e23866.

9 Velu S. An efficient, lightweight MobileNetV2-based fine-tuned model for COVID-19 detection using chest X-ray images. Math Biosci Eng. 2023;20(5):8400-8427. doi:10.3934/mbe.2023368.

10 Ogundokun RO, Li A, Babatunde RS, et al. Enhancing Skin Cancer Detection and Classification in Dermoscopic Images through Concatenated MobileNetV2 and Xception Models. Bioengineering (Basel). 2023;10(8). doi:10.3390/bioengineering10080979.

11 Ekmekyapar T, Taşcı B. Exemplar MobileNetV2-Based Artificial Intelligence for Robust and Accurate Diagnosis of Multiple Sclerosis. Diagnostics (Basel). 2023;13(19). doi:10.3390/diagnostics13193030.

12 ML in Health Science. Limb Salvage Prediction with Pedal Angiograms. https://mlhs.ink/amputate/. Accessed October 1, 2024.

13 Huang C, Sarabi M, Ragab AE. MobileNet-V2 /IFHO model for Accurate Detection of early-stage diabetic retinopathy. Heliyon. 2024;10(17):e37293. doi:10.1016/j.heliyon.2024.e37293.

14 Rusinovich Y, Liashko V, Rusinovich V, et al. Limb salvage prediction in peripheral artery disease patients using angiographic computer vision. Vascular. 2025:17085381241312467. doi:10.1177/17085381241312467.

15 Rodrigues J, Santos-Faria D, Silva J, Azevedo S, Tavares-Costa J, Teixeira F. Sonoanatomy of anterior forearm muscles. J Ultrasound. 2019;22(3):401-405. doi:10.1007/s40477-019-00388-z.

16 Sandler M, Howard A, Zhu M, Zhmoginov A, Chen L-C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. 2018. doi:10.1109/CVPR.2018.00474. Preprint: doi:10.48550/arXiv.1801.04381.

17 ML in Health Science. Image Classifier. https://mlhs.ink/Playground/#SIC. Accessed March 25, 2025.

18 ML in Health Science. Video Classifier. https://mlhs.ink/Playground/#SVC. Accessed March 18, 2025.

19 Rusinovich Y, Vareiko A, Shestak N. Human-centered Evaluation of AI and ML Projects. Web3MLHS. 2024;1(2):d150224. doi:10.62487/ypqhkt57.


License

Copyright (c) 2025 Yury Rusinovich, Volha Rusinovich, Markus Doss (Author)

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.
