Do You Agree with AI Making Decisions About Your Treatment? A Comparative Survey of IT and Healthcare Practitioners

DOI: https://doi.org/10.62487/m67cnn54

Keywords: Human-Centered AI, AI Supervision, Trust in AI, Human-AI Collaboration, AI Healthcare Survey

Abstract

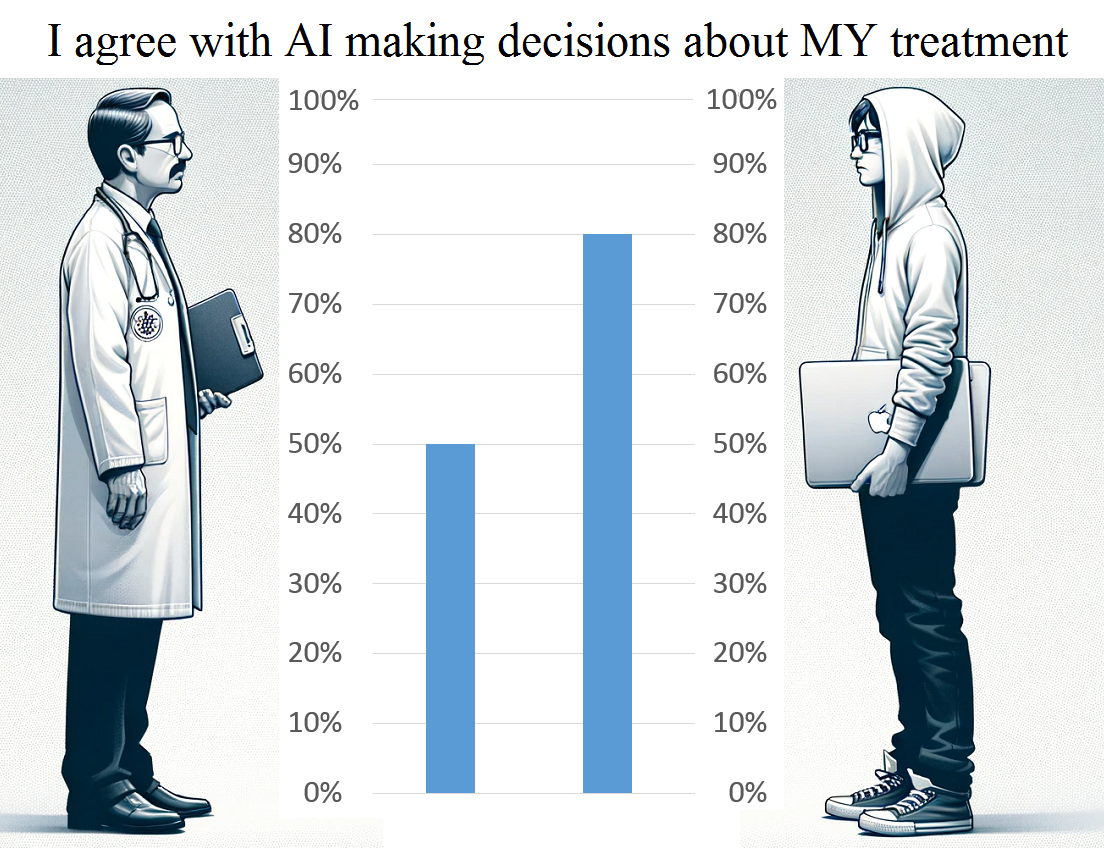

Aim: The primary aim of this study was to investigate and compare the opinions of professional groups responsible for the development of medical AI and ML models regarding the use of those models for their own treatment.

Materials and Methods: This survey was conducted through a combination of private interviews and anonymous online polls, using platforms such as Telegram, LinkedIn, and Viber. The target audience comprised international groups of healthcare and IT practitioners, primarily Russian-, German-, and English-speaking. Participants ranged in expertise and experience from beginners to veterans. The survey centered on a single question: “Do you agree with AI making decisions about your treatment including diagnostics, surgeries, and medications?” Respondents could choose from three responses: “Yes”, “Yes, if supervised by a doctor”, and “No”.

Results: A total of 427 unique and verified individuals participated in this survey, comprising 226 IT and 201 healthcare practitioners. The survey results revealed a statistically significant (p < 0.0001) difference between the two groups. Over 50% of healthcare workers answered “No” to the application of AI and ML algorithms in their own treatment. In contrast, IT practitioners demonstrated a higher level of trust in the healthcare system's integration with technology, with 70% expressing willingness to be treated by AI under the supervision of a doctor. Fewer than 10% of respondents agreed to the application of AI in their own treatment without human supervision.

Conclusion: This study reveals a marked contrast in the level of trust between healthcare and IT practitioners regarding the application of AI and ML in their own treatment. Only about 50% of healthcare workers express trust in AI, compared to about 80% of IT practitioners. Notably, complete trust in AI-driven treatment without human supervision is exceedingly low in both groups, at less than 10%. In clinical settings, patients should be informed about AI applications in their diagnostic and treatment processes.

Original Article

Background

Machine Learning (ML) and Artificial Intelligence (AI) in Health Science

In recent years, the application of AI and ML in healthcare settings has experienced a breakthrough. These technologies are now prevalent across various domains, including diagnostics and treatment processes. The application of these algorithms is currently not subject to binding regulation: the available norms are advisory only [1, 2]. Thus, AI and ML algorithms can currently be applied in clinical settings without separate patient consent.

Aim

The primary aim of this study was to investigate and compare the opinions of professional groups responsible for the development of medical AI and ML models regarding the use of those models for their own treatment.

Material and Methods

To achieve our aim, we conducted an online survey in January 2024. This survey was carried out using a combination of private interviews and anonymous online polls, leveraging platforms such as Telegram, LinkedIn, and Viber. The results and discussions from this survey are openly accessible on the official Telegram Channel of the ML in Health Science Initiative, which can be visited at: https://t.me/MLinHS

The target audience comprised international groups of healthcare and IT practitioners, primarily Russian-, German-, and English-speaking. Participants ranged in expertise and experience from beginners to veterans. The survey centered on a single question: “Do you agree with AI making decisions about YOUR treatment including diagnostics, surgeries, and medications?” Respondents could choose from three responses: “Yes”, “Yes, if supervised by a doctor”, and “No”. This single-question design was chosen to gather, as concisely as possible, the perspectives of professionals who are either directly affected by or involved in the integration of AI in healthcare, while preserving the anonymity and confidentiality of the respondents.

Statistics

The data were analyzed with a two-tailed Fisher’s exact test. In the analysis, each response option (“Yes”, “Yes, if supervised by a doctor”, and “No”) was compared against the combined total of the other two options. For example, responses indicating “Yes” were compared to the aggregate of responses for “Yes, if supervised by a doctor” and “No”. Each comparison used a 2x2 table (professional group by option chosen versus not chosen) to make the preferences within each category easy to read. A p-value < 0.05 was considered statistically significant. GraphPad QuickCalcs (California, US) was used for the statistical analysis.
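The comparison described above can be sketched in a few lines of Python. This is an illustration only, not the authors' procedure, which used GraphPad QuickCalcs: the function name `fisher_exact_two_sided`, the variable names, and the choice of the common "sum of all tables no more probable than the observed one" definition of the two-tailed p-value are assumptions made for this sketch. The counts are those reported in Table 1.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Assumed definition: sum the hypergeometric probabilities of every table
    with the same margins that is no more probable than the observed one.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Probability of seeing x in the top-left cell given fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    lo, hi = max(0, col1 - row2), min(row1, col1)
    p_obs = prob(a)
    # Small relative tolerance guards against floating-point ties.
    total = sum(p for p in map(prob, range(lo, hi + 1)) if p <= p_obs * (1 + 1e-7))
    return min(1.0, total)


# Counts from Table 1: (healthcare workers, IT practitioners).
counts = {
    "Yes": (13, 20),
    "Yes, if supervised by a doctor": (84, 158),
    "No": (104, 48),
}
n_healthcare, n_it = 201, 226

# Each option is compared against the other two options combined.
p_values = {
    option: fisher_exact_two_sided(hc, n_healthcare - hc, it, n_it - it)
    for option, (hc, it) in counts.items()
}

for option, p in p_values.items():
    print(f"{option}: p = {p:.4g}")
```

Because three separate 2x2 comparisons are made on the same respondents, a reader reproducing the analysis may also want to consider a correction for multiple comparisons; the source does not mention one.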

Results

A total of 427 unique and verified individuals participated in this survey, comprising 226 IT practitioners and 201 healthcare workers. The survey results revealed a statistically significant difference between the two groups. Over 50% of healthcare workers definitively answered “No” to the application of AI and ML algorithms in their own treatment. In contrast, IT specialists demonstrated a higher level of trust in the healthcare system's integration with technology, with 70% expressing willingness to be treated by AI under the supervision of a doctor. Table 1 and Figure 1 summarize the survey results.

| Variable | Healthcare Workers | IT Practitioners | P-value |
|---|---|---|---|
| Yes | 13 | 20 | 0.37 |
| Yes, if supervised by a doctor | 84 | 158 | < 0.001 |
| No | 104 | 48 | < 0.001 |
| Total | 201 | 226 | |

Table 1: Survey Results.

Figure 1: Survey Results.

Interestingly, a substantial consensus was observed across both groups against undergoing treatment solely by AI or ML, with 92% of all respondents opposing this approach.
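The percentages quoted in the text can be rederived from the counts in Table 1. The short sketch below does that arithmetic; the variable names and the `share` helper are illustrative. Here "trust" is taken to mean the sum of the “Yes” and “Yes, if supervised by a doctor” responses, which is an assumption about how the rounded 50% and 80% figures were obtained.

```python
# Counts from Table 1: (healthcare workers, IT practitioners).
counts = {
    "Yes": (13, 20),
    "Yes, if supervised by a doctor": (84, 158),
    "No": (104, 48),
}
totals = (201, 226)


def share(numerator, denominator):
    """Return numerator / denominator as a percentage."""
    return 100 * numerator / denominator


supervised = counts["Yes, if supervised by a doctor"]

# Healthcare workers answering "No" (reported as "over 50%").
hc_no = share(counts["No"][0], totals[0])
# IT practitioners accepting AI under a doctor's supervision (reported as 70%).
it_supervised = share(supervised[1], totals[1])
# Assumed definition of "trust": "Yes" plus "Yes, if supervised by a doctor".
hc_trust = share(counts["Yes"][0] + supervised[0], totals[0])
it_trust = share(counts["Yes"][1] + supervised[1], totals[1])
# Respondents in both groups who did not choose an unconditional "Yes".
against_ai_only = share(sum(totals) - sum(counts["Yes"]), sum(totals))

for label, value in [
    ("Healthcare 'No'", hc_no),
    ("IT 'Yes, if supervised'", it_supervised),
    ("Healthcare trust", hc_trust),
    ("IT trust", it_trust),
    ("Against AI-only treatment", against_ai_only),
]:
    print(f"{label}: {value:.1f}%")
```

Under that assumption, the unrounded trust shares come out slightly below the rounded 50% and 80% quoted in the text.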

Discussion

Practical standpoint

1. Contrast in Trust Levels: The survey revealed a significant contrast in trust levels between healthcare and IT practitioners concerning the use of AI and ML in medicine. Healthcare workers exhibited greater skepticism towards the application of AI and ML algorithms, whether with or without human supervision. Only 50% of these respondents expressed trust in this technology. In contrast, IT practitioners demonstrated more confidence in the healthcare system's integration with AI and ML, showing a readiness to be treated using these technologies, provided there is human oversight.

2. Healthcare Workers' Caution: The fact that more than 50% of healthcare workers are definitively opposed to AI and ML algorithms for their treatment suggests a cautious stance possibly rooted in their understanding of the complexities and nuances of medical care, which they may feel AI currently cannot fully comprehend or handle.

3. Broad Consensus on AI-only Treatment: The overwhelming consensus (92%) against treatment solely by AI or ML across both groups underscores a significant apprehension about removing the human element entirely from healthcare. This reflects concerns about ethical implications and the importance of human supervision in diagnostic and treatment processes with the application of AI models.

To the best of our knowledge, this study is the first to capture the opinions of life science specialists regarding the application of AI in their own medical treatment.

Limitations

The primary limitation of this study is the potential bias arising from the possible non-representativeness of the surveyed groups, attributable to the anonymous online methodology.

Conclusion

This study reveals a marked contrast in the level of trust between healthcare workers and IT practitioners regarding the application of AI and ML in their own treatment. Only about 50% of healthcare workers express trust in AI, compared to about 80% of IT practitioners. Notably, complete trust in AI-driven treatment without human supervision is exceedingly low in both groups, at less than 10%. In clinical settings, patients should be informed about AI applications in their diagnostic and treatment processes.

Conflict of Interest: The authors state that no conflict of interest exists.

Authorship: YR: Concept, data analysis, original draft. YR, VR, AR: Survey. YR, VR: Review and editing.

License

Copyright (c) 2024 Yury Rusinovich, Anhelina Rusinovich, Volha Rusinovich (Author)

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.
